Research

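Morphoacoustics is a research area that explores the interrelationship between physical structure, acoustical properties, and perception. We are currently investigating three phenomena: human outer ears and spatial hearing, violins and their acoustic radiance patterns, and the human vocal tract and speech.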

For each of these phenomena, the three attributes of physical structure, acoustical properties, and perception are closely interrelated in a complex way. A key component of morphoacoustics research is therefore the ability to understand and predict how changes in physical structure lead to changes in acoustical properties, which in turn lead to changes in perception.

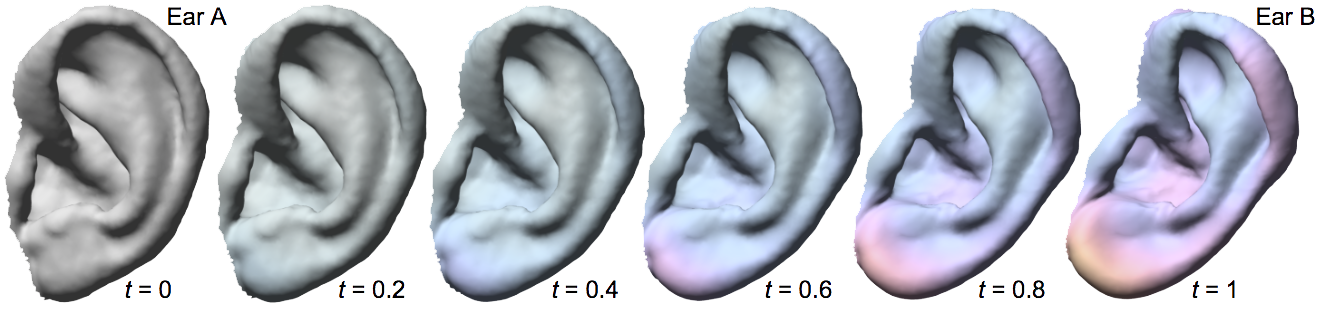

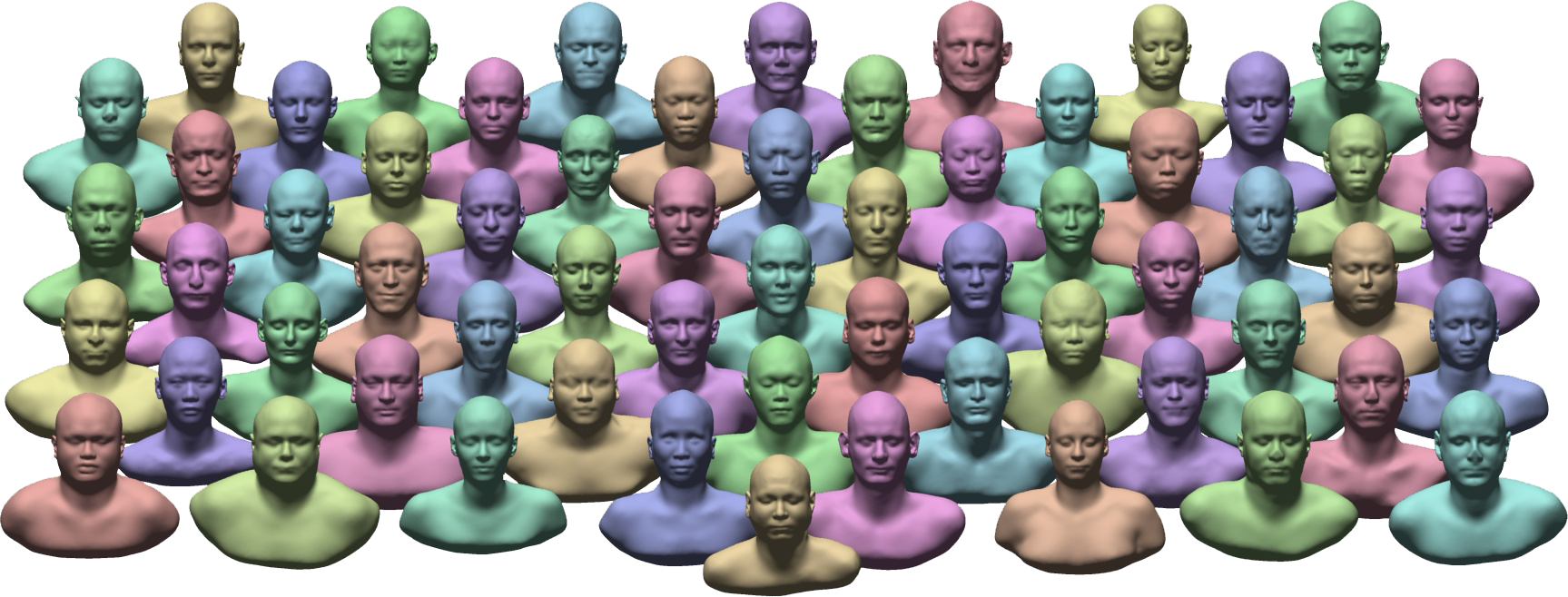

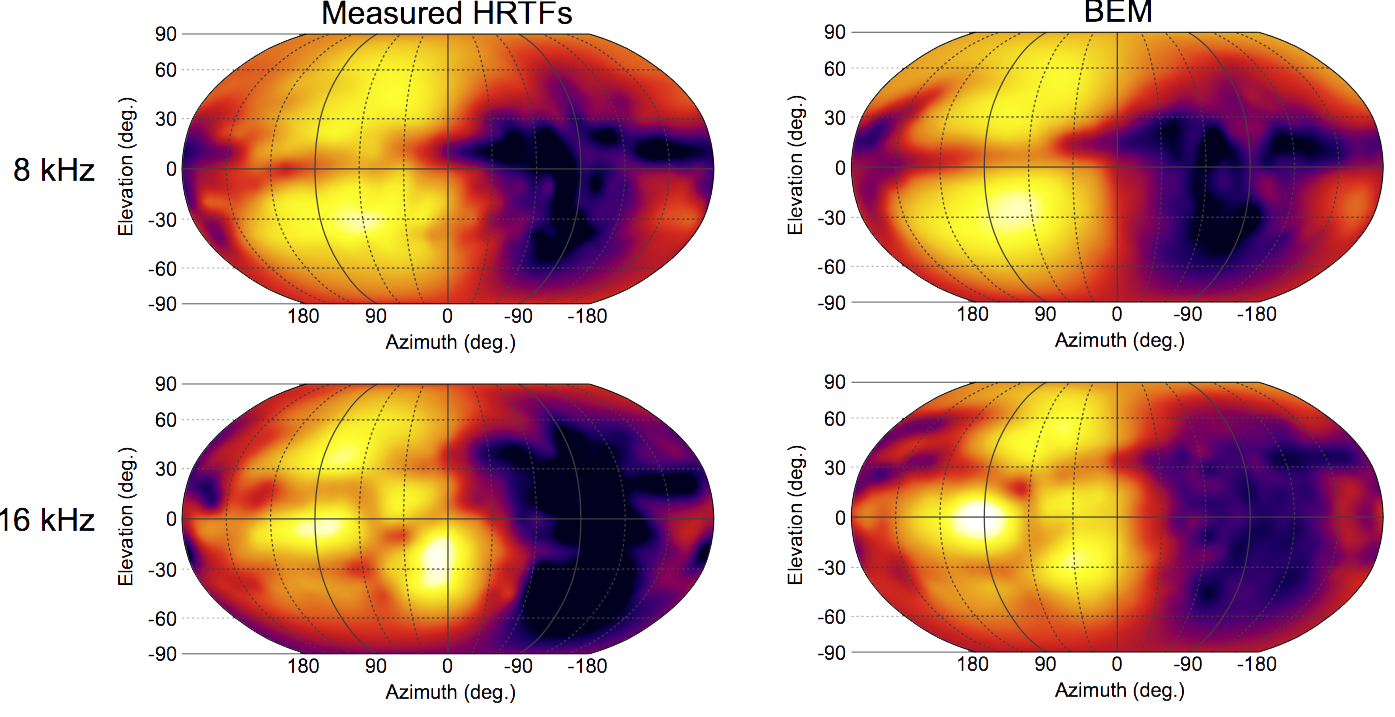

Our research approach first requires that we can mathematically model deformations in each of the three attributes. For example, consider spatial hearing, in which our outer ears enable us to perceive the location of sounds in space. Outer ears vary in shape across listeners, so how do we model these shape variations? At a given frequency, each outer ear has a unique directivity pattern, so how do we model variations in these acoustic directivity patterns? The directivity patterns of the outer ears influence the perception of both sound localization and sound timbre, so how do we model variations in the perception of localization and timbre?

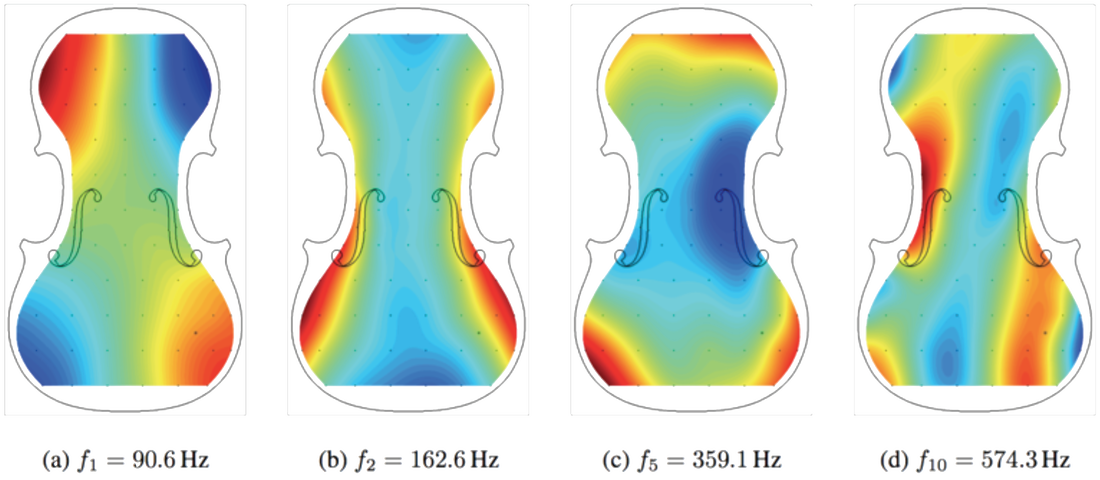

In addition to suitable mathematical models of deformations in the three attributes, we also require numerical simulation tools that enable us to predict the consequences of those deformations. For example, even if we can deform an ear shape mathematically, how can we determine what changes occur in the acoustic directivity patterns? Similarly, if we can model changes in acoustic directivity patterns, how can we determine what changes occur in perception? To this end, we must develop accurate simulation tools.

We have found that our research approach requires new tools and new data. Please read on if you are interested!

- 1. Human outer ears and spatial hearing

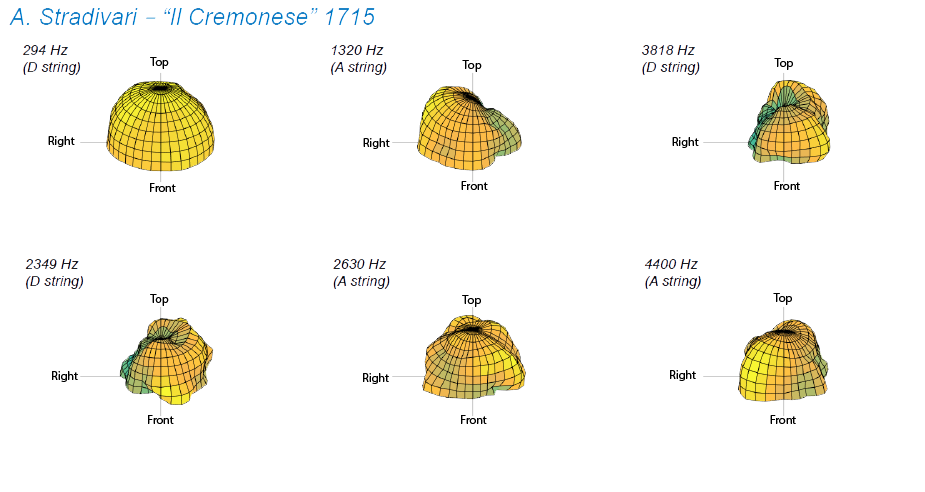

- 2. Violins and their acoustic radiance patterns

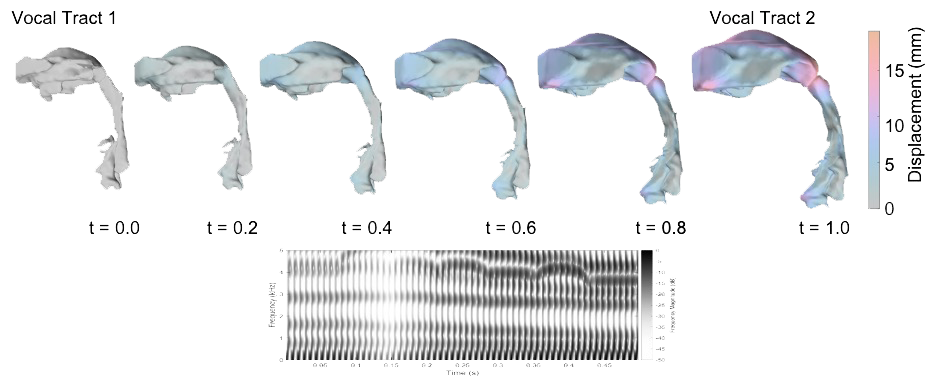

- 3. Human vocal tract and speech
